The marriage of AI with telehealth was inevitable in the age of big data and neural networks. No one could have anticipated how profoundly it would alter the landscape of healthcare delivery.

On the second floor of Aravind Eye Hospital in Madurai, South India’s vibrant temple town, 60-year-old Muthuswamy Ramalingam rested his chin on the chin rest of the desktop device, as instructed by the clinician. He looked straight into the camera as it captured several scans of the back of his eye, the retina. After checking the quality of the images on his monitor, the clinician tapped the keyboard briefly. Then they both waited.

A few minutes later, the diagnosis appeared on the monitor, and it was not good: the scans showed that Ramalingam, already diabetic, had signs of diabetic retinopathy. The good news was that an ophthalmologist could help him stave off blindness with timely and appropriate treatment.

The remarkable thing about this moment was that no doctor had been involved in any part of the process. Instead, the scans had been sent to a remote artificial intelligence (AI) system developed and trained by Google and its sister company Verily to spot diabetic retinopathy in retinal scans. Called Automated Retinal Disease Assessment (ARDA), it analyzes scans at warp speed, with accuracy rivaling that of eye specialists, and sends the report back to the clinic. For India, a country with over 212 million people with diabetes (about a quarter of the world’s diabetic population) and only about 15 ophthalmologists per million people, that is a giant leap in healthcare.

Aravind Hospital was already a pioneer in using telemedicine to detect diabetic retinopathy in its early stages, when it is asymptomatic and no one has eye doctors on their mind. “We screened them opportunistically when they visited the diabetologist or physician for their routine diabetic check-ups,” says Dr. Kim Ramaswamy, Chief Medical Officer at Aravind. The hospital placed retinal cameras in several diabetology clinics in villages around Madurai and screened patients who came in for diabetic examinations. The images were uploaded to the main hospital in Madurai, where expert ophthalmologists evaluated them. The reports were back at the clinic within two hours, usually in good time for the doctor to propose treatment options if needed.

Change started in 2013, when a Google team from Mountain View, U.S.A., inspired by Google research scientist Varun Gulshan, reached out to Aravind Hospital and Sankara Netralaya, another eye hospital in South India, to grade retinal scans that would be used to train an AI model. Before long, the model was competently identifying nerve tissue damage, swelling and bleeding, all key markers of diabetic retinopathy. Google dramatically expanded its dataset by enlisting the Eye Picture Archive Communication System (EyePACS), a U.S.-based telemedicine network that, like Aravind Hospital, connects patients in remote areas with ophthalmologists for diabetic retinopathy screening.

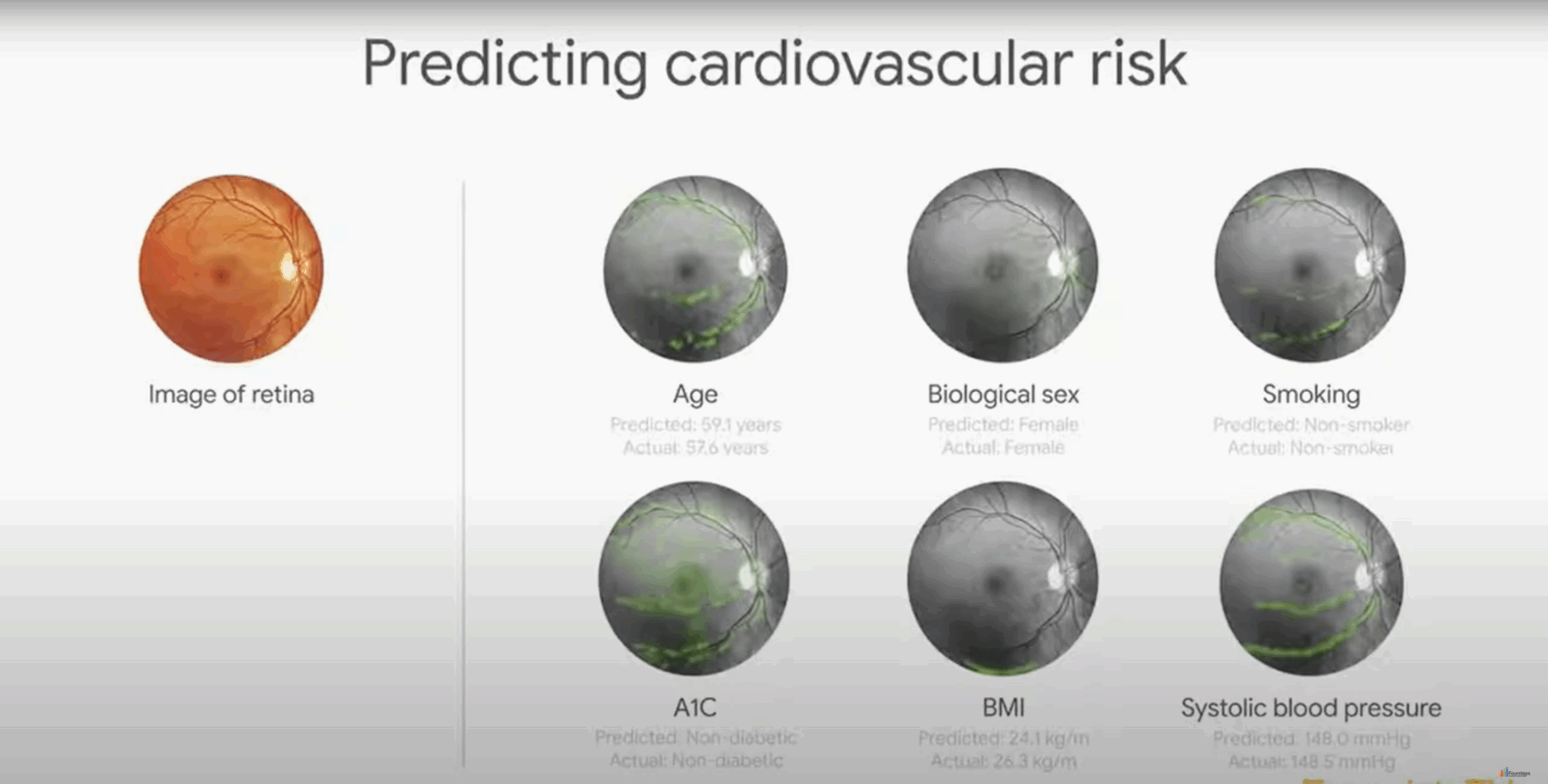

After more than 100 ophthalmologists had graded millions of retinal scans to train the AI model, ARDA was tested between April and July 2016. Aravind Hospital and Sankara Netralaya enrolled 3,049 diabetic patients, and the AI’s assessments of their retinal scans were compared with manual grading by one trained grader and one retina specialist at each site. ARDA’s algorithm consistently showed high accuracy, often performing on par with trained ophthalmologists in both sensitivity (correctly identifying cases) and specificity (avoiding false positives), making it a valuable tool for detecting diabetic retinopathy. Surprisingly, AI models trained on retinal scans also turned out to predict things no one had expected a picture of the eye to reveal, including the patient’s age, gender, smoking status, body mass index and systolic blood pressure.
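Sensitivity and specificity are simple ratios drawn from a screening study’s confusion matrix. The minimal Python sketch below shows the arithmetic. The patient counts are invented for illustration and are not results from the Aravind and Sankara Netralaya trial.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Share of patients who have the disease that the screen correctly flags."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Share of disease-free patients that the screen correctly clears."""
    return true_neg / (true_neg + false_pos)


# Hypothetical screening of 1,000 patients (illustrative numbers only):
# 100 truly have referable retinopathy, 900 do not.
tp, fn = 90, 10    # 90 cases caught, 10 missed
tn, fp = 855, 45   # 855 correctly cleared, 45 false alarms

print(f"sensitivity = {sensitivity(tp, fn):.1%}")  # sensitivity = 90.0%
print(f"specificity = {specificity(tn, fp):.1%}")  # specificity = 95.0%
```

A screen tuned to miss fewer cases usually raises more false alarms, which is why studies report both numbers rather than a single accuracy figure.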

Google AI in Aravind Hospital

ARDA is powered by neural networks, the same technology that already drives face recognition services, talking digital assistants, driverless cars and instant translation. Neural networks digest vast amounts of data, detecting patterns and making connections with and without supervision. By studying millions of retinal scans, ARDA is now an expert in identifying the condition on its own. In early 2019, European regulators awarded the system CE certification for use under the Verily name; the USA’s Food and Drug Administration (FDA) followed soon after.

“The number of patients we were diagnosing with diabetic retinopathy went up eightfold after we began using AI with ARDA,” says Dr. Ramaswamy. “We now deploy AI-powered diabetic retinopathy screening at many of our centers. This has freed up our retina specialists to go back to clinical work since they are no longer required for grading.”

The ARDA experiment is a stunning example of an emerging phenomenon: the confluence of artificial intelligence (AI) with telehealth and telemedicine. While telemedicine has a longer and more storied history, AI exploded into the public consciousness on November 30, 2022, with the launch of ChatGPT by the company OpenAI. The clunky name is derived from Generative Pre-trained Transformer (GPT), a deep learning model that uses a neural network architecture to generate human-like text by predicting, one word or word fragment at a time, what is most likely to come next in a sequence.

Trained on a vast diet of text such as books, websites and articles, ChatGPT appears to grasp language structure, grammar, facts, context and intent. Its underlying model, with billions of parameters, works at blinding speed and draws on what it learned from vast datasets to provide insights, detect patterns in information, propose solutions or simply handle everyday tasks.

When such formidable power is harnessed by telemedicine to serve communities for whom even finding a doctor is a challenge, it can revolutionize the way high-quality professional healthcare is accessed, and deliver lightning-fast diagnoses and top-class treatment options to patients right where they live.

A short history of long-distance healthcare

The guiding principle behind telemedicine is nearly as old as healthcare: if the patient cannot go to the doctor, the doctor must go to the patient. Home visits were a standard part of medical practice because the home was understood to be the hub of all testing, treatment, diagnosis and monitoring. Change came inevitably as growing urban populations increased the pressure on doctors, and specialized technology such as X-ray machines and magnetic resonance imaging (MRI) became common. In 2024, there were about 94 primary care physicians per 100,000 Americans, while the world’s most populous country, India, struggled with about 69 doctors per 100,000, and China stood at about 32 per 100,000. Telemedicine grew out of the need to provide timely and professional medical services to remote, underserved locations where face-to-face consultation with doctors was not possible.

Each leap forward in telemedicine was driven by an advance in telecommunication technology. The invention of the electric telegraph by Samuel Morse, who also developed Morse code, led to a landmark long-distance text message: “What hath God wrought!” It was transmitted on May 24, 1844, from Washington, D.C., to Baltimore, Maryland, a distance of 38.6 miles. Within a decade, about 15,000 miles of telegraph line had been laid, and by the American Civil War the telegraph was being used to order medical supplies and report casualties remotely.

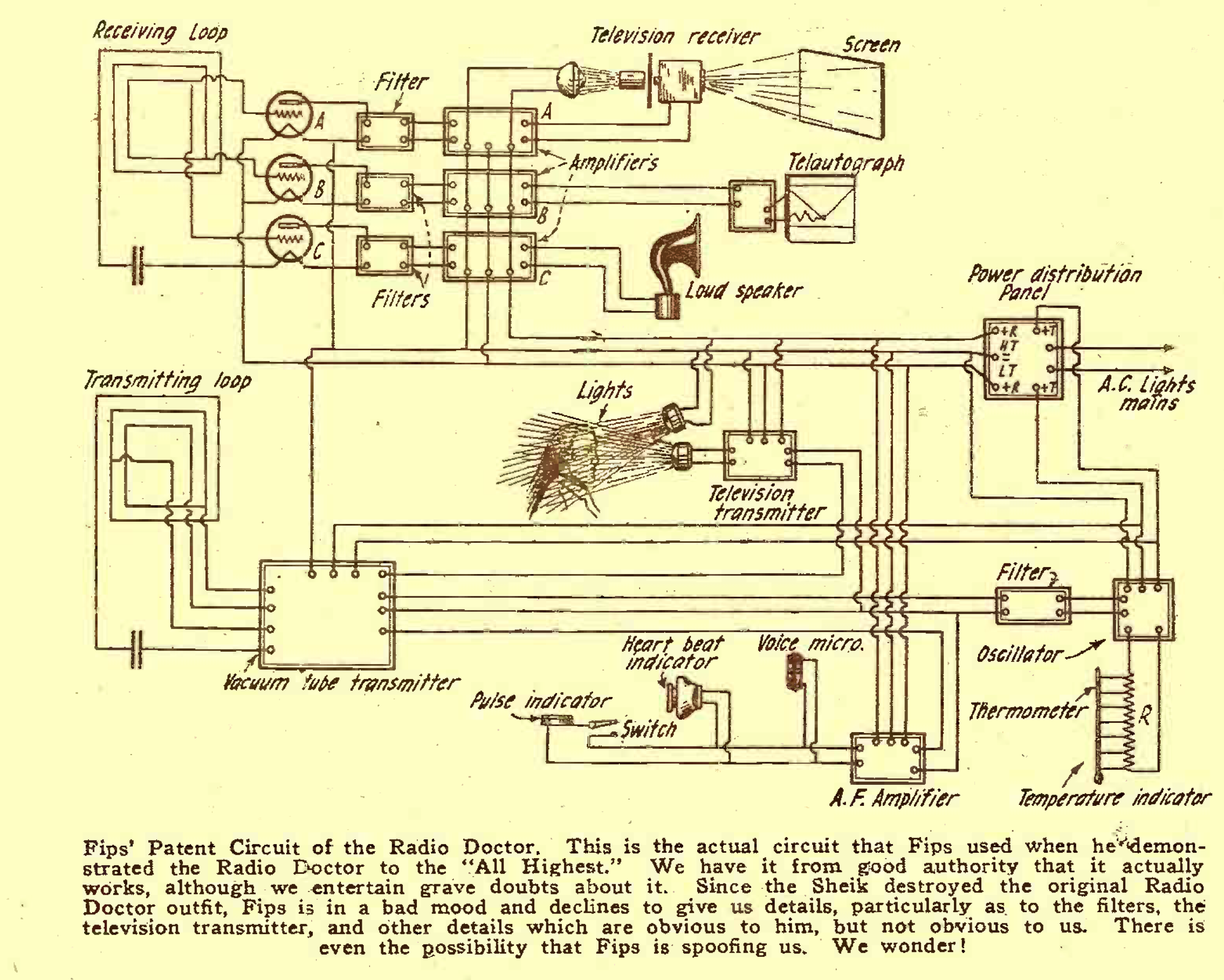

The invention of the telephone by Alexander Graham Bell in 1876 also rewrote the rules of medical practice, making remote consultations possible. However, it was with the advent of radio communications in the early 1900s that telemedicine came into its own. The earliest inkling of what lay ahead came from a comical fantasy in the April 1924 edition of Radio News, in which an imperious cartoon character called The Caliph is introduced to the “ultra-latest radio marvel,” the Radio Doctor. A twist of the Telodial brings up on the device’s screen the beaming face of Dr. Hackensaw, counting and sorting microbes in distant New York. He listens to the Caliph’s heartbeat through a funnel-shaped Rastetoscop placed on his chest and pronounces it sluggish. The Caliph then inserts his arm into a socket so that Dr. Hackensaw can check his pulse, and sticks out his tongue to be examined. Next, wonder of wonders, a handwritten prescription emerges from the so-called Teleautographic attachment on one side of the Radio Doctor.

Modern telehealth has realized each of these fantasies and gone beyond. Radio-based telehealth spread like wildfire. Within four years of that satire, in 1928, Australia pioneered the use of radio communication in telemedicine with the Aerial Medical Service, set up by Reverend John Flynn. Consultations and diagnoses were carried out by telegraph and radio, after which a qualified health professional would be flown out to the patient to continue treatment. By the time of the Korean and Vietnam wars, the US military had institutionalized telemedicine via radio as a prelude to dispatching medical teams and helicopters.

Like radio, television was a disruptor when it was invented in 1927 and soon changed the modalities of telemedicine. In barely 25 years, the Nebraska Psychiatric Institute was remotely monitoring patients using closed-circuit television. By 1959, the institute was delivering group and long-term therapy, consultation-liaison psychiatry and medical student training to Norfolk State Hospital 112 miles away. Five years later, in 1964, they established their first interactive, two-way video link. Telehealth — which goes beyond diagnosis, treatment and follow-up care to encompass clinical services and non-clinical aspects like education and administrative support — was officially online.

Radio News

Three years later, the first comprehensive telemedicine system was installed to connect the medical station at Boston’s Logan Airport to Massachusetts General Hospital. It demonstrated first-hand not only that accurate remote diagnoses were possible using interactive television, but also that X-rays, lab results and medical records could be transmitted successfully. It also established the template for a typical clinical telemedicine program: a medical center with one or more physicians, connected by two-way communication with several satellite clinics staffed by nurses, nurse practitioners or physician assistants.

Telemedicine capabilities spread rapidly to disciplines like cardiology, dermatology, radiology and anesthesia.

Cardiology: The heart is one of the earliest organs where electric and electronic devices were pioneered in diagnostics and therapy. Basic metrics like a person’s arterial blood pressure and heart rhythm are easily acquired and can carry crucial, life-saving information electronically. Data from permanently implanted devices like cardiac pacemakers and defibrillators, which have been in use for decades, can easily be relayed to distant sites. Some are even Bluetooth-enabled now and can be monitored using a smartphone.

One of the most striking of these is a remotely operated defibrillator, a device familiar from emergency-room scenes in hospital TV dramas. The patient unit of the so-called trans-telephonic defibrillator (TTD) contains a microprocessor, microphone, electrocardiogram/defibrillator electrode pads and cellular telephone. It puts patients in instant touch with distant hospital-based physicians who can diagnose and monitor them, advise their caregivers and, where necessary, defibrillate them remotely, staying in voice contact with the person operating the patient unit while keeping full control of the defibrillator.

The system, approved for use by the U.S. Food and Drug Administration in 1987, was first tested on June 26, 1989, in the telemetry unit of Jewish Hospital in St. Louis, Missouri. Since then, the TTD has been tested in an urban setting in 211 calls in which a physician responded from a mobile coronary care unit up to 15 miles away. Voice and data were communicated satisfactorily in 172 of the 211 calls (81.5%). Ventricular fibrillation or ventricular tachycardia was successfully corrected in 46 of 48 episodes involving 22 patients, with 100 direct-current shocks of 50 to 360 joules.

Telemedicine has also profoundly transformed the prospects of babies with congenital heart disease, which is often diagnosed only after birth through echocardiography. Prognoses used to be bleak in remote settings far from expert cardiologists. However, a game-changing experiment in Canada in December 1987 established tele-echocardiography as feasible, cost-effective and diagnostically useful for infants far from specialists. The Izaak Walton Killam (IWK) Children’s Hospital in Halifax opened a 24-hour, high-quality telephone link with the Saint John Regional Hospital in New Brunswick, about 310 miles away, so that IWK’s specialist pediatric cardiologists on standby could supervise and interpret scans in real time. In the first nine months, echocardiograms of 18 children with a median age of five months were transmitted with excellent image and sound quality and examined in real time, and accurate diagnoses were made. Congenital heart disease was found in 12 infants and other cardiac issues in two. Tele-echocardiography is the standard of care in pediatric cardiology today.

Anesthesia: Laser-mediated telemedicine came to anesthesia in 1974. Since this procedure requires expertise rather than manual dexterity, tele-anesthesia is possible with a remote anesthesiologist guiding clinical staff and technicians in the operating room while viewing the patient and the surrounding area on a color monitor, and manipulating the camera remotely.

How NASA’s STARPAHC boosted telemedicine

How far can a patient be from the doctor and still access telemedicine? What if the patient is not even on this planet? What if they are on the Moon? Or halfway between Earth and Mars? Telemedicine’s biggest boost came from the National Aeronautics and Space Administration’s (NASA) efforts to understand and systematize medical support for its astronauts as they pushed deeper and deeper into space.

The environment of space is harsh and unprecedented, mirroring some of Earth’s most unforgiving regions with additional complications caused by the presence of microgravity. “Exposure to space disturbs most physiological systems and can precipitate space-specific illnesses such as cardiovascular deconditioning, acute radiation syndrome, hypobaric decompression sickness and osteoporotic fractures,” says Matthieu Komorowski, a consultant in intensive care and anesthesia at London’s Charing Cross Hospital. Brittle bones can break, blood can clot and astronauts with only basic medical skills can find themselves dealing with medical emergencies while millions of miles from home and with only limited medical supplies on board. NASA realized that telemedicine was their best recourse.

Since November 2000, when the first crew moved aboard the International Space Station (ISS), NASA’s Human Health and Performance team has been developing and honing its expertise to keep human beings healthy 250 miles above Earth while moving at 17,500 miles per hour, and preparing for missions beyond, into interplanetary space. The story of how NASA dealt with the first-ever blood clot in outer space is illustrative.

Dr. Stephan Moll, professor of medicine at the University of North Carolina (UNC) School of Medicine at Chapel Hill, was enjoying a Sunday morning in late 2019 with his family when the phone rang. “It’s for you,” said his wife. “It’s from outer space.”

Dr. Moll had already received an email from NASA requesting an urgent conversation. During a routine vascular examination of 11 crew members, an astronaut [name and details withheld] had been found to have a blood clot, called a deep vein thrombosis (DVT), in the jugular vein. The question was whether to start the astronaut on blood thinners, which might sound like a straightforward decision but was not.

“If you start a blood thinner, you’re at higher risk for bleeding,” says Dr. Moll. “If you don’t start one, there’s a higher risk of the clot growing and perhaps leading to a pulmonary embolism. Because this was the first blood clot ever detected in an astronaut, there was no experience about what to do and no knowledge about possible complications.” All decisions were based on extrapolating from Earth procedures to predict what might happen in the microgravity of space.

Moll and his team prescribed an injectable blood thinner, enoxaparin, but a new problem arose: only a limited amount was in stock on board the ISS. Guided by Moll, NASA optimized the enoxaparin dosing, spreading the supply over about 40 days. On the 43rd day, NASA’s next cargo mission arrived with a supply of apixaban, a blood thinner taken orally as a pill. NASA monitored the clot closely during the 90 or so days of treatment through ultrasound scans performed by the astronaut with guidance from the radiology team on Earth. When the six-month mission ended, the astronaut was still asymptomatic and the clot required no further treatment.

Long before this incident, NASA had recognized that it needed an earthbound testing site for developing and pretesting a telehealth system that could serve the healthcare needs of humans in space. Out of this thinking grew NASA’s groundbreaking telemedicine project in cooperation with the Indian Health Service and the Papago people of southern Arizona: Space Technology Applied to Rural Papago Advanced Health Care, or STARPAHC. The project was carried out in the 1970s on a sparsely populated Indian reservation in southern Arizona covering about 4,300 square miles. For the 10,000 or so Papago who lived there in 75 small villages, access to medical care was a dire issue, often requiring most of a day just to reach a clinic.

STARPAHC was configured around a fully equipped central medical service at the Sells Indian Hospital, linked through telecommunications, telemetry and a computerized data system to a fixed satellite clinic at Santa Rosa, a mobile health clinic and a hospital-based specialty referral center in Phoenix.

STARPAHC demonstrated that high-quality healthcare could be delivered to remote and underserved communities even through non-physician personnel, provided the communication technology was robust and user-friendly. It also showed that detailed planning and collaboration between technology providers, healthcare professionals and the beneficiaries were key to success.

How the pandemic accelerated telemedicine

About half a century after STARPAHC, the rules of telemedicine changed drastically and unexpectedly in March 2020 with the COVID-19 pandemic. Until then, telehealth had remained a fringe area with high potential but limited popular appeal. A 2017 survey of healthcare providers and consumers showed that 82% of Americans had not used telehealth services, though the few who had rated them highly. COVID-19 turned everyone into a remote patient.

The pandemic caught medical services unprepared, filling hospital wards to overflowing and putting frontline workers under enormous pressure. While they struggled to keep up, scientists worked round the clock to understand the rapidly evolving virus and develop treatments and vaccines. The combination of a deadly, highly transmissible virus and misinformation created a predicament for caregivers and patients: how to continue providing healthcare without getting infected. Telehealth quickly emerged as an effective, sustainable solution, recommended by doctors and hospitals, that helped bridge the gap between people, physicians and health systems. Hospitals began using telehealth to treat quarantined COVID-19 patients.

The U.S. government relaxed Medicare’s telehealth rules to allow beneficiaries in any location to access services from home. The U.S. Department of Health and Human Services (HHS) also relaxed enforcement of the Health Insurance Portability and Accountability Act (HIPAA) for telemedicine so that health providers could use more familiar platforms like Facebook Messenger and Zoom. By 2021, public use of telehealth services had stabilized at a level 38 times higher than before the pandemic.

The Utah-based Intermountain Healthcare took the pressure off its emergency rooms and freed up hospital beds with a remote patient-monitoring COVID-19 program that combined telemedicine and home monitoring. Patients who had tested positive for or were suspected of having COVID-19, and who had non-life-threatening symptoms, were sent home with a Bluetooth pulse oximeter paired with their smartphone. Their blood oxygen levels, measured daily for two weeks, were transmitted to a centrally located nurse care team. If the levels seemed low, the next step was a clinical evaluation by phone or video. Only patients whose condition worsened were called to the emergency room.
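An escalation ladder like Intermountain’s (daily home readings, then a phone or video evaluation, then the emergency room) can be written as a small decision function. This Python sketch is hypothetical: the function name and the 92% and 88% blood-oxygen cut-offs are illustrative assumptions, not Intermountain’s clinical thresholds, and a real program would weigh symptoms and trends rather than a single reading.

```python
from enum import Enum


class Action(Enum):
    CONTINUE_MONITORING = "continue daily home monitoring"
    TELEHEALTH_REVIEW = "clinical evaluation by phone or video"
    EMERGENCY_ROOM = "refer to the emergency room"


# Hypothetical SpO2 cut-offs in percent, for illustration only (not clinical guidance).
LOW_SPO2 = 92
CRITICAL_SPO2 = 88


def triage(spo2: int) -> Action:
    """Map one daily pulse-oximeter reading to the next step on the escalation ladder."""
    if spo2 < CRITICAL_SPO2:
        return Action.EMERGENCY_ROOM
    if spo2 < LOW_SPO2:
        return Action.TELEHEALTH_REVIEW
    return Action.CONTINUE_MONITORING


for reading in (97, 90, 85):
    print(reading, "->", triage(reading).value)
```

Keeping the thresholds as named constants makes it easy for a clinical team to review or change them without touching the decision logic.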

In July 2021, with the pandemic waning, a review of telehealth by McKinsey showed stable improvements in perceptions, attitudes and acceptance of telehealth among consumers and providers, though concerns about privacy and security remained. Investment in virtual care and digital health had tripled from its pre-pandemic levels. In the first half of 2021, $14.7 billion of venture capital poured into digital health, nearly twice the investment two years earlier.

Kaiser Permanente, one of the early adopters of telehealth technology and processes, transformed its approach to managing chronic conditions such as high blood pressure, achieving a control rate above 90%. Traditional blood pressure management is intermittent and sporadic, with intervals as long as six months when the patient is unmonitored. For many, a monthly visit to a doctor just to get their blood pressure checked is expensive and time-consuming. Kaiser Permanente uses telehealth to increase the frequency of remote measurement and the timeliness of treatment, effectively keeping a nearly continuous watch on its patients’ vitals. As a result, Kaiser Permanente members in California and the mid-Atlantic region are 14% less likely to die from stroke and 43% less likely to die from heart disease than Americans as a whole.

Remote patient monitoring (RPM), one of the strategies used to provide healthcare during COVID-19, came into its own after the pandemic, giving providers a faster, more reliable read on patients, especially those with chronic conditions such as diabetes and hypertension. For patients with chronic obstructive pulmonary disease (COPD), the use of RPM devices decreased hospitalizations by 65% and emergency department visits by 44%.

As telehealth gained acceptance and grew, something quite extraordinary and unprecedented was unfolding in the world of computer science. A question that philosophers and scientists had played with for centuries was finally being settled: Can machines think? No one was quite prepared for the answer or its implications.

The rise of Artificial Intelligence (AI)

In 1950, the English mathematician, computer scientist and logician Alan Turing suggested a simple way to determine whether a computer could think like a human. Rather than adopt the traditional approach of defining the words machine and think, he changed the question to: Can machines do what we do? Turing’s test was based on the imitation game, a party game in which a player has to guess the genders of two people hidden from view by studying their typewritten answers to questions. Turing proposed that if the two hidden participants were a human and a computer, and a player could not tell them apart from their printed answers, we would have to conclude that the computer had responded like a human. Over the decades, the Turing test in its various forms has remained a benchmark for evaluating human-like machine ‘thinking’.

Ways to make machines learn and process knowledge are at the heart of artificial intelligence. From the 1950s, two approaches developed in parallel: expert systems and neural networks. Only one of them would grow to dominate the field of AI.

An expert system is like a super-smart digital assistant that knows everything about a specific topic, for example suggesting diagnoses by analyzing a patient’s symptoms and lab results. The computer is ‘trained’ using knowledge acquired from real experts such as doctors and scientists, organized into a framework such as If-Then rules that mimic human reasoning. An ‘inference engine’ then applies these rules logically to evaluate problems and suggest solutions. By design, these systems are only as ‘expert’ as the data and rules they have been fed.
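A toy inference engine makes the If-Then idea concrete. The Python sketch below uses forward chaining: it keeps firing any rule whose conditions are all known facts until nothing new can be concluded. This is one common strategy among several (Mycin itself mostly reasoned backward from candidate diagnoses). The rules are hypothetical placeholders, not medical knowledge from Mycin or any clinical system.

```python
# A rule pairs a set of conditions (all must be known) with one conclusion.
Rule = tuple[frozenset[str], str]

# Hypothetical rules for illustration only; not clinical guidance.
RULES: list[Rule] = [
    (frozenset({"fever", "stiff neck"}), "suspect meningitis"),
    (frozenset({"suspect meningitis", "gram-negative stain"}), "suggest gram-negative meningitis"),
    (frozenset({"fever", "cough"}), "suspect respiratory infection"),
]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Keep firing rules whose conditions are satisfied until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)  # a new conclusion can satisfy later rules
                changed = True
    return known


observed = {"fever", "stiff neck", "gram-negative stain"}
print(sorted(forward_chain(observed, RULES) - observed))
# ['suggest gram-negative meningitis', 'suspect meningitis']
```

The second conclusion is reached only because the first was added to the set of known facts; that chaining of one rule’s output into another rule’s input is what the inference engine contributes.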

Among the earliest expert systems was Dendral, begun at Stanford University in 1965 to identify unknown organic molecules. It was followed by Mycin, developed in the early 1970s within the Stanford Heuristic Programming Project led by Edward Feigenbaum, also known as the “father of expert systems”. Mycin suggested diagnoses for infectious diseases by studying the patient’s symptoms and lab test results. Other expert systems included Internist, developed by Harry Pople at the University of Pittsburgh in the early 1970s to aid in diagnoses in internal medicine, and Caduceus, an evolution of Internist developed in the mid-1980s that could diagnose up to 1,000 diseases and was described as “the most knowledge-intensive expert system in existence”.

In 1959, the term machine learning entered the conversation, referring to computers that can learn from data and generalize to unseen data, and thus perform tasks without explicit instructions. The term was coined by Arthur Samuel, an IBM employee and a pioneer of computer gaming and artificial intelligence.

The artificial neural network (ANN), a type of machine learning model that can detect patterns and make predictions much as humans do, was inspired by, and grew out of efforts to mimic, the biological neural networks in animal brains. Everyday examples of ANNs include face recognition, speech recognition and self-driving cars, which use them to detect objects and make driving decisions.
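The simplest artificial neuron, Frank Rosenblatt’s perceptron from the late 1950s, already shows what it means to learn from data rather than from hand-written rules: it nudges its weights every time it misclassifies an example. This Python sketch trains one on the logical AND function. The learning rate and number of passes are arbitrary choices, and modern neural networks stack many layers of such units and train them with gradient descent rather than this simple update rule.

```python
def step(weights, bias, x):
    """Fire (1) if the weighted sum of inputs plus bias is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, labels, epochs=10, lr=1.0):
    """Learn weights and a bias with the classic perceptron update rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - step(weights, bias, x)  # -1, 0 or +1
            # Adjust weights toward the correct answer only when the neuron was wrong.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Logical AND: the output should be 1 only when both inputs are 1.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]

weights, bias = train_perceptron(X, y)
print([step(weights, bias, x) for x in X])  # [0, 0, 0, 1]
```

Nobody tells the neuron the rule for AND; it settles on weights that reproduce the labeled examples, which is the sense in which such models learn patterns from data.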

The age of artificial intelligence was born in 1956 at the Dartmouth Summer Research Project on Artificial Intelligence, a workshop widely considered to have established AI as a field. It brought together Claude Shannon, best known as the father of information theory, and the computer and cognitive scientists John McCarthy, Nathaniel Rochester and Marvin Minsky, regarded as some of the founding fathers of AI. The words artificial intelligence were first used in the workshop proposal they had written the previous year, dated August 31, 1955, which included these prescient words: “An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.”

Since everything a computer does is implicitly artificial, a sharp observer may ask if there was a specific occasion when a computer did something disturbingly human-like. That moment came 61 years after the Dartmouth Summer Research Project. It was neither planned nor expected.

Attention is all you need: The Transformer Story

Some say the title of the epoch-making 2017 paper “Attention Is All You Need” was a whimsical nod to the Beatles song “All You Need Is Love.” Authored by a young and diverse team of Google scientists, the landmark paper introduced a new deep learning architecture known as the transformer, a model that excels at sequence-to-sequence tasks such as language translation and text generation, and that has since been adapted to understanding images. The attention mechanism is like a student studying a long textbook chapter. He doesn’t need to remember every word. Instead, he picks out key points as he skims the pages and highlights important explanatory sentences, and when answering a question, he refers back to these. Similarly, a transformer assigns importance, or weights, to the words in a sentence by comparing each word with every other word in the input, using patterns it learned from its enormous training data. This helps it capture the intent and meaning of the input and frame an appropriate, meaningful response.
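The weighting step can be made concrete with the scaled dot-product attention formula from the 2017 paper, softmax(QKᵀ/√d)V, where Q, K and V hold the query, key and value vectors for the words in the input. In this minimal Python sketch the word vectors are tiny and hand-picked, and for simplicity the same vectors serve as queries, keys and values; a real transformer derives them through separate learned projections and runs many such attention “heads” in parallel.

```python
import math


def softmax(scores):
    """Turn raw similarity scores into positive weights that sum to 1."""
    top = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one query at a time."""
    d = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(d) as in the 2017 paper.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        weight_rows.append(weights)
        # The output is the attention-weighted average of the value vectors.
        dim = len(values[0])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs, weight_rows


# Hypothetical 2-D vectors for a three-word input; real models learn far larger ones.
words = ["patient", "has", "diabetes"]
vectors = [[1.0, 0.0], [0.1, 0.1], [0.9, 0.2]]

outputs, weights = attention(vectors, vectors, vectors)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row sums to 1. The word “patient” puts more weight on itself and on “diabetes,” whose vectors point in a similar direction, than on “has,” which is the highlighting behavior the student analogy describes.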

The eureka moment came one day when Łukasz Kaiser, the Google team’s supervisor, trained the transformer on nearly half of Wikipedia and then asked it to create five Wikipedia entries titled ‘The Transformer’. To his utter astonishment, the transformer dreamed up five shockingly credible, entirely fictitious articles.

A Japanese hardcore-punk band called The Transformer, formed in 1968.

A science-fiction novel called The Transformer by a fictional writer named Herman Muirhead.

A video game called The Transformer created by the (real) game company Konami.

A 2013 Australian sitcom called The Transformer.

And The Transformer, the second studio album from Acoustic, a non-existent alternative metal group.

Though full of glitches and contradictions, the writing was confident and authoritative, with granular detail. For example, there was a lengthy history of the punk band: “In 2006 the band split up and the remaining members reformed under the name Starmirror.”

The Google team was stunned. Where were the details coming from? How did the model decide which details suited the topic? Most of all, how was a neural network built to translate text able to wax eloquent in human-like prose, seemingly out of thin air?

Aidan Gomez, one of the team members, said, “I thought we would get to something like this in 20 or 25 years, and then it just showed up.” It felt like digital sorcery; no one had a clue how the magic was happening. The machine had gone beyond what its makers intended.

By leveraging AI, we can expand access to rare disease expertise and greatly reduce time to diagnosis — from years to months or even days.

Scott Gorman, RAPID Program Manager

When AI met telemedicine

The marriage of AI with telemedicine was inevitable in an age of big data and neural networks. Just how big is big data? The healthcare industry is expected to generate approximately 2.5 zettabytes of data globally this year, marginally more than last year’s 2.3 zettabytes. A zettabyte is a number so vast that it is easiest to grasp by analogy: if a gigabyte were a cup of coffee, a zettabyte would be a trillion cups, roughly a quarter of a cubic kilometer of coffee, or enough to fill about 100,000 Olympic swimming pools. Processing such a universe of data and gleaning patterns and insights from it would be impossible without AI.
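The scale of the coffee analogy can be checked with a few lines of arithmetic. A zettabyte is 10²¹ bytes and a gigabyte is 10⁹ bytes, so one zettabyte contains 10¹² (a trillion) gigabytes. The Python sketch below assumes a 250 mL cup and a 2,500 m³ Olympic pool (50 m by 25 m by 2 m); both are round-number assumptions.

```python
BYTES_PER_GB = 10**9
BYTES_PER_ZB = 10**21
CUP_LITERS = 0.25          # assumed size of one cup of coffee
OLYMPIC_POOL_M3 = 2_500    # 50 m x 25 m x 2 m, a common round figure

cups = BYTES_PER_ZB // BYTES_PER_GB         # one cup per gigabyte
cubic_meters = cups * CUP_LITERS / 1_000    # 1 m^3 = 1,000 liters
cubic_km = cubic_meters / 1e9               # 1 km^3 = 10^9 m^3
pools = cubic_meters / OLYMPIC_POOL_M3

print(f"{cups:,} cups = {cubic_meters:,.0f} m^3 = {cubic_km:.2f} km^3 = {pools:,.0f} Olympic pools")
# 1,000,000,000,000 cups = 250,000,000 m^3 = 0.25 km^3 = 100,000 Olympic pools
```

Multiplying by 2.5 gives the scale of the healthcare industry’s estimated annual output in the same units.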

AI is turning the search for healthcare from an ordeal into a convenience for busy city-dwellers and a boon for people with mobility issues or those living in remote areas. A few taps of a finger can schedule a consultation, and visiting a physician becomes as effortless as sitting in front of a TV. Around 75% of healthcare organizations report that integrating AI into their operations improved their ability to treat diseases effectively while reducing staff burnout.

Because the patient’s history contributes about 76% of the diagnostic process and physical examination only about 11%, much of what a diagnosis depends on can be gathered remotely as data, and AI has become a valuable tool for helping medical professionals assess and interpret that data more efficiently. AI algorithms can rapidly process large datasets, allowing medical professionals to identify potential health risks early, often before they are detectable by traditional methods.

Telehealth and telemedicine are a booming market, projected to grow at a compound annual growth rate of 23.2% between 2023 and 2028 as technology advances, regulations evolve, and patients and healthcare professionals come to accept telemedicine as a safe, economical and viable choice. AI is dramatically redrawing the telehealth landscape in the prediction, diagnosis, treatment and monitoring of diseases such as heart disease, cancer, respiratory disorders and diabetes, part of the wider group of noncommunicable diseases that together account for nearly 75% of deaths worldwide each year.
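A compound annual growth rate compounds like interest: a market growing at rate r for n years ends up (1 + r)ⁿ times its starting size. The Python sketch below applies that to the 23.2% projection for the five years from 2023 to 2028, and also inverts the formula to recover the rate from start and end values. No dollar figures are assumed; only the growth multiple is computed.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """How many times larger a quantity becomes after compounding `cagr` for `years` years."""
    return (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """The constant annual rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


years = 2028 - 2023
multiple = growth_multiple(0.232, years)
print(f"{multiple:.2f}x over {years} years")         # 2.84x over 5 years
print(f"{implied_cagr(1.0, multiple, years):.1%}")   # 23.2%
```

Compounding matters here: five years at 23.2% is not 116% growth (5 × 23.2%) but roughly 184%, leaving the market at about 2.8 times its 2023 size.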

Prediction and diagnosis

A striking demonstration of AI’s potential to predict disease came exactly a month after the first COVID-19 patient was detected in China. On December 31, 2019, there was a sharp 300% spike in the number of Internet queries using the keywords ‘epidemic’, ‘masks’ and ‘coronavirus’ on Baidu, China’s largest search engine and the world’s second-largest, used by 696 million people in China at the time. Nearly one-fifth of those queries originated in Hubei province, and half of those came from Wuhan city. Who was behind these searches? Were they people worried about their symptoms? Relatives of infected people? Could the number of searches be used to predict the number of cases?

To find out, researchers trained a graph convolutional network, a type of AI model designed for data structured as networks, drawing on 444 million search queries to select 32 keywords. Applied to search queries from Beijing’s COVID-19 outbreak centered on Xinfadi, a major food market, the model predicted the number of cases with 79% accuracy, days before estimates from traditional monitoring came in. The more people searched with these keywords, the more cases were reported, usually about two days later.

Early diagnosis is an area where AI is already outperforming medical experts in some tasks, catching consequential diseases while they are still nascent. Pranav Rajpurkar, Assistant Professor of Biomedical Informatics at Harvard Medical School, believes that AI may soon be capable of zero-error diagnoses. “I think there can be a world in which, because of AI, we don’t make medical errors and in which no disease is missed,” he says.

The most promising application so far is improving the speed and accuracy with which diagnostic scans are interpreted. Axel Heitmueller, CEO of Imperial College Health Partners in the UK, says AI systems already read X-rays, MRIs and CT scans “perhaps more consistently than humans can”.

Heart disease: AI-based techniques are rapidly changing how heart disease is predicted, diagnosed and monitored, in some cases even forecasting when a heart condition might prove fatal. AI has helped clean up electrocardiograms, filtering out noise and improving their accuracy. A system evocatively named ‘Health Fog’ collects heart-related data through biosensors and processes it with deep learning on edge computing devices, delivering insights and diagnoses to healthcare providers in real time. Another system, which tracks vital signs such as blood pressure, heart rate and temperature to predict heart disease, is cloud-based and can be accessed by doctors and patients wherever they are.

In one study that looked at real-world applications for predicting heart disease, a cloud-based system used machine learning models to analyze patient data, including smartphone-based monitoring, and predicted heart disease with 97.53% accuracy. Another study used a deep learning model to classify heart sensor data as normal or abnormal, achieving an accuracy of 98.2%.

Cancers: AI has shown accuracy exceeding 90% in identifying certain cancers. One system used a machine learning technique to classify breast cancer with 99% accuracy and 98% sensitivity. An AI model that analyzed images to detect skin cancer achieved 96.805% accuracy.
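Accuracy and sensitivity measure different things, which matters when a disease is rare. Accuracy counts all correct calls, while sensitivity counts only how many truly sick patients are caught. The sketch below uses standard confusion-matrix definitions; the class name and the screening counts are hypothetical, chosen to show how a model can score 99% accuracy while missing half of the actual cases.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # sick patients the model flagged (true positives)
    fn: int  # sick patients the model missed (false negatives)
    tn: int  # healthy patients correctly cleared (true negatives)
    fp: int  # healthy patients wrongly flagged, i.e. false alarms (false positives)

    def accuracy(self) -> float:
        # Share of all patients, sick or healthy, classified correctly.
        return (self.tp + self.tn) / (self.tp + self.fn + self.tn + self.fp)

    def sensitivity(self) -> float:
        # Share of truly sick patients the model catches.
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Share of healthy patients correctly left unflagged.
        return self.tn / (self.tn + self.fp)


# Hypothetical screening of 1,000 people, only 10 of whom have the disease.
screen = ConfusionMatrix(tp=5, fn=5, tn=985, fp=5)
# screen.accuracy() is 0.99, yet screen.sensitivity() is only 0.5
```

This is why studies that report sensitivity alongside accuracy, as the breast cancer figure does, give a fuller picture than accuracy alone.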

Ovarian cancer is another disease where AI is making a difference. An early indication of ovarian cancer is a lesion, often an incidental finding during a medical exam. A misdiagnosis can lead to unnecessary procedures or precious time lost. A recent study trained an AI neural network model using 17,119 ultrasound images from 3,652 patients across 20 medical centers in eight countries. When finally put to work, the AI model consistently predicted or identified ovarian cancer correctly, avoiding false positives. In a simulation, it drove down expert referrals by 63% while improving diagnostic accuracy.

Diabetes: AI is emerging as a powerful tool for the early detection, prediction and management of diabetes. Several studies have applied machine learning to factors such as body weight, medications and sleep patterns to predict blood sugar levels, which indicate diabetes risk and must be kept in check to prevent complications such as blindness. Some have achieved accuracy rates as high as 95%, and one deep learning study predicted diabetes from heart rate data with 95.7% accuracy.

Tuberculosis: AI and machine learning are emerging as promising tools for detecting and diagnosing tuberculosis through analysis of chest X-rays and other medical images, automated microscopy and predictive modeling. One system scanned and analyzed 531 slides, correctly identifying TB in 40 of the 56 positive samples. AI has recognized TB with 82% accuracy in chest X-rays from Chinese hospitals. Another system, using 800 chest X-rays from public datasets, went beyond TB detection to identify conditions such as fibrosis and pleural effusion. An AI-driven computer program can now detect TB bacteria in microscopic smear images with 78.4% accuracy and even predict how patients might respond to different TB medications.
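The slide-scanning result is a detection rate among confirmed positives, that is, a sensitivity rather than an overall accuracy, so it is not directly comparable with the accuracy percentages elsewhere in the paragraph. Worked out:

$$
\text{sensitivity} = \frac{\text{TB-positive samples correctly identified}}{\text{all TB-positive samples}} = \frac{40}{56} \approx 71.4\%
$$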

Alzheimer’s disease: Studies have reported accuracies between 98.8% and 99.95% for AI models predicting Alzheimer’s disease in its early stages from neuroimaging data, electronic medical records and speech data.

Treatment and monitoring

AI is already ushering in an age of personalized medicine by analyzing large datasets to understand the unique characteristics of individual patients and even predict how they will respond to specific treatments. Patients could expect personalized treatment plans based on their genetic makeup, lifestyle and other factors.

Remote monitoring was already a rapidly growing aspect of telemedicine, but it came of age and became an imperative during COVID-19. Remote-controlled telepresence robots with built-in AI and vision systems for navigating around obstacles are becoming common sights in hospital corridors. Thanks to a built-in monitor and a software interface, they have proved to be viable alternatives to face-to-face interactions and even medical examinations. For example, the Dr. Rho Medical Telepresence Robot features an intuitive vision system that instructs the cameras to follow the movements and gestures of the doctor and a micro-projector for collaborative checks and procedures.

Monitoring infected patients without exposing staff to the contagion was a unique challenge of COVID-19. A controlled study at Queen Mary Hospital, Hong Kong, found that wearable health monitors combined with artificial intelligence can help doctors detect early signs of deterioration in people with mild COVID-19 before serious symptoms appear. A gender-balanced group of 34 patients was admitted to an isolation ward, where their vital signs, such as heart rate and oxygen levels, were checked manually 1,231 times over 15 days. These manual readings closely matched data collected concurrently by wearable devices: heart rate readings agreed 96% of the time, and oxygen levels 87% of the time.
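An agreement figure such as “matched 96% of the time” is typically computed by pairing each manual reading with the wearable reading taken at the same time and counting the pairs that fall within some tolerance. The study’s exact matching criterion is not given here, so the tolerance below is a hypothetical parameter, and the four paired heart rate readings are made up for illustration.

```python
def agreement_rate(manual: list[float], wearable: list[float], tolerance: float) -> float:
    """Fraction of paired readings where the wearable is within `tolerance` of the manual value."""
    if len(manual) != len(wearable) or not manual:
        raise ValueError("need equal-length, non-empty series of paired readings")
    # Each pair counts as a match if the absolute difference is within the tolerance.
    matches = sum(abs(m - w) <= tolerance for m, w in zip(manual, wearable))
    return matches / len(manual)


# Hypothetical paired heart rate readings (beats per minute), tolerance of 3 bpm.
rate = agreement_rate([72, 88, 95, 101], [74, 87, 99, 100], tolerance=3)
# Three of the four pairs differ by 3 bpm or less, so rate is 0.75.
```

The choice of tolerance drives the result: a looser threshold raises the agreement rate, which is why such figures are only meaningful alongside the criterion used.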

Researchers also used AI to generate a health score called the Biovitals Index, which reflected a patient’s overall condition based on symptoms, vital signs and other medical information, including viral load. The AI-based system correctly identified patients whose condition might worsen 94% of the time and avoided false alarms 89% of the time. It correctly estimated who would need a longer hospital stay two-thirds of the time.

AI-powered devices and sensors can collect data on vital signs such as heart rate, blood pressure and glucose levels, detect anomalies, predict potential health issues before they become critical, and alert remote healthcare providers in real time.
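The article does not say which models such devices use to detect anomalies. As a simple stand-in, the sketch below flags any reading that deviates sharply from the wearer’s own recent baseline, using a rolling z-score. This is a classical statistical technique rather than a learned AI model, and the window size, threshold and heart rate series are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 10, threshold: float = 3.0) -> list[int]:
    """Return indices of readings more than `threshold` standard deviations from the
    mean of the preceding `window` readings (a rolling z-score)."""
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all counts as a deviation.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical heart rate stream (bpm) with one sudden spike at index 11.
heart_rate = [70, 72, 71, 73, 72, 70, 71, 72, 73, 71, 72, 118, 71]
alerts = flag_anomalies(heart_rate)
```

Here only the spike to 118 bpm is flagged. Comparing each reading with the wearer’s own recent history, rather than a population-wide norm, is what lets such a rule adapt to individuals, though real systems would also need to handle noise, motion artifacts and gradual drifts.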

Administration

A study by the American College of Physicians found that doctors spend half or more of their time on paperwork to maintain and analyze patient records, or on taking patients’ medical histories. In an AI-driven world, such tasks are ideal for a chatbot. Patient data can also be streamed to clinicians in real time through wearable and implantable devices, improving remote patient monitoring. Approximately 41% of Americans wear a smart device that continuously monitors their vital signs and other health indicators. Statista estimates that there are already around 300 million smart homes globally, further easing the integration of telehealth services with smart home devices and AI.

One of the early AI-driven solutions was Remedy, founded by a group of MIT and Princeton alumni to offer affordable access to doctors. Its automated, chatbot-like medical assistant, Remy, combines chat with a structured questionnaire to capture a patient’s history and complaints, then sends them in summary form to a remote Remedy-associated physician for diagnosis and treatment. The patient can converse with the doctor through the chat interface and even send photos, videos and documents. The entire visit, right up to the prescription, can be virtual. The Remedy system can triage about 70% of the cases that come to an emergency or primary care clinic.

AI’s machine learning algorithms can process vast amounts of patient data ranging from medical histories to diagnostic images and accurately catch patterns and anomalies that a human expert might miss. Such systems are revolutionizing how medical data are collected, analyzed and used, leading to more personalized, efficient and effective healthcare.

The Future

Humankind is at the cusp of a new healthcare paradigm in which the formidable power of AI is harnessed to improve and accelerate healthcare delivery exponentially, whether you live a block away or on the other side of the planet. New technologies create new opportunities, some of them difficult to anticipate, some of them startling in their benefits. We can make a few guesses.

Space will lead the way. Outer space will always be where we explore new frontiers and push the envelope. Whenever a manned Mars mission finally happens, the distances across which astronauts’ health must be monitored will grow as never before, and so will communication latency. Messages between Earth and the Moon, only 384,000 km apart, take about 1.3 seconds each way, but Mars is between 55 million and 378 million km away, and a message can take as long as 21 minutes to travel one way. Bridging this gap will foster new strategies and technologies.
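These delays follow directly from the speed of light, which sets a hard floor on one-way signal time. A short sketch, reading the Mars distances as kilometers (the unit consistent with the 21-minute figure) and ignoring relay and processing overhead, which would only add to the delay:

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact, by definition of the metre


def one_way_delay_s(distance_km: float) -> float:
    """Minimum one-way signal delay in seconds over a given distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S


moon = one_way_delay_s(384_000)    # about 1.28 seconds
mars_near = one_way_delay_s(55e6)  # about 183 seconds, roughly 3 minutes
mars_far = one_way_delay_s(378e6)  # about 1,261 seconds, roughly 21 minutes
```

A question and its answer therefore take at least twice these times, up to about 42 minutes at the far end, which rules out live video consultations and pushes more of the monitoring and decision-making onto systems running aboard the spacecraft.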

Rare diseases will be detected faster. They’re called rare, but rare diseases are pretty common, and difficult to diagnose. Over 10,000 unique conditions affect over 350 million people worldwide. Diagnosis can be protracted and inconclusive, and can take years. The RAPID program (Rare disease AI/Machine Learning for Precision Integrated Diagnostics) of the US government’s Advanced Research Projects Agency for Health (ARPA-H) is taking aim at this very problem. “By leveraging AI, we can expand access to rare disease expertise and greatly reduce time to diagnosis — from years to months or even days,” said RAPID Program Manager Scott Gorman. “AI-enabled support tools combined with confirmatory testing such as whole genome sequencing allow us to sift through the ‘haystack’ of patient data more efficiently and pinpoint the ‘needles’ of rare diseases.”

Drones will rule. Drones, or unmanned aerial vehicles (UAVs), are already the busy bees of numerous systems, from surveillance and military combat to retail delivery. To test whether they could deliver medical help in an emergency, a drone-based system was trialed in a simulated accident in a remote area involving participants without any medical background. The experiment demonstrated that drones equipped with diagnostic tools can rapidly deliver testing kits, such as those for COVID-19, malaria or HIV, to remote areas and bring samples back to the lab for analysis. Even better, drones can carry advanced portable diagnostic devices capable of measuring temperature or blood glucose levels directly in the field.

A preview of the future of AI in telemedicine is taking shape on a remote, windblown island of 138 people. Although it is only about 38 minutes by ferry from Louisburgh in Ireland’s County Mayo, Clare Island cannot be reached on many days of the year because rough, unpredictable weather forces the ferry to be canceled. The island did not even have electricity until the 1980s. Meeting the small community’s healthcare needs has long been a challenge, worsened by the island’s poor internet connectivity.

These were the very reasons Clare Island was chosen to serve as a model for delivering AI-enabled telehealth to remote settings anywhere, says Professor O’Keeffe, the University of Galway physicist behind the pioneering Healthy Islands project launched towards the end of 2022.

Global technology giant Cisco, a member of the public-private partnership, set up robust private 5G connectivity across terrain hostile to fiber optic cables. Air Taurus supplied rugged, autonomous Eiger drones capable of carrying 3 kg of medical supplies and flying at 75 mph over challenging terrain in unpredictable weather. A robotic all-terrain delivery dog, Madro, stood by for emergencies.

Participating residents wore Withings Pulse HR watches that continuously tracked their vital signs, such as pulse and temperature, along with indicators of heart health, and shared them in real time with the clinic on the mainland. Any anomaly of concern, such as tachycardia, would immediately trigger a consultation over two-way web radio and, if necessary, an emergency intervention. If a prescription had to be filled, a drone would fly in with medications and other supplies.
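The project’s actual alerting logic is not described here. As a purely hypothetical sketch of the kind of rule that could turn a watch’s readings into a call to the mainland clinic, the function below raises a flag only when several consecutive resting heart rate readings exceed 100 beats per minute, the conventional adult threshold for tachycardia. The function name, the consecutive-reading requirement and the sample values are illustrative assumptions, not part of the Withings product or the Healthy Islands system.

```python
def sustained_tachycardia(resting_hr_bpm: list[int], threshold: int = 100,
                          min_consecutive: int = 3) -> bool:
    """Hypothetical alert rule: True if the most recent `min_consecutive`
    resting heart rate readings all exceed `threshold` beats per minute."""
    if len(resting_hr_bpm) < min_consecutive:
        return False  # not enough data to call it sustained
    return all(hr > threshold for hr in resting_hr_bpm[-min_consecutive:])


# Hypothetical readings: a steady climb above 100 bpm triggers the rule,
# while a single high reading followed by a normal one does not.
alert = sustained_tachycardia([78, 82, 104, 108, 111])
no_alert = sustained_tachycardia([78, 104, 96, 108])
```

Requiring several consecutive readings trades a slightly later alert for fewer false alarms from a single noisy measurement; a fixed clinical threshold is also easier for clinicians to audit than a learned model, at the cost of ignoring each wearer’s own baseline.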

The era in which the doctor went to the patient, or vice versa, is over. AI and telehealth are propelling us into a more reliable, speedy and efficient world where a doctor can personalize high-quality healthcare for each patient in ways not possible before, without even leaving the clinic.

Many would call that a game-changer.